Michael Arteaga, and Rebecca E. Grinter from Georgia Tech.

Since people use computers to support religious and spiritual practices, designers need to learn how to design for these faith-related purposes. The authors of this paper present a mobile phone application designed to support Islamic practice.

Design guidance for faith-related purposes is hard to come by, even though people already use computers for them. For example, Muslims pray five times a day. A phone application that reminds them when to pray would seem useful, although someone who prays five times a day is probably already used to the schedule. Some Muslims already use applications such as camera phones to take pictures of shrines when they visit, and they pray to the pictures. Meanwhile, Christians use applications to help them learn new Bible verses.

There are about 2.3 million Muslims in the US, and the religion is growing. Muslims have long used compasses to find the direction of Mecca and sundials to tell the time so that they can pray at the right moment. About 15 minutes before prayer time, they begin preparing by washing themselves and performing other rituals. In Muslim countries, television stations halt broadcasts during prayer times. Ultimately, it is the position of the sun that determines exactly when to pray.
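Finding the direction of Mecca, the job the compass has traditionally done, can be illustrated as a great-circle bearing calculation. This is a minimal sketch and not part of the paper's design: it assumes a spherical Earth and approximate coordinates for the Kaaba (about 21.4225° N, 39.8262° E), and the function name `qibla_bearing_deg` is hypothetical.

```python
import math

# Approximate coordinates of the Kaaba in Mecca, in degrees (an assumption of this sketch).
KAABA_LAT = 21.4225
KAABA_LON = 39.8262


def qibla_bearing_deg(lat_deg: float, lon_deg: float) -> float:
    """Initial great-circle bearing from (lat, lon) toward the Kaaba.

    Hypothetical helper. Returns degrees clockwise from true north in [0, 360),
    assuming a spherical Earth.
    """
    phi1 = math.radians(lat_deg)
    phi2 = math.radians(KAABA_LAT)
    dlon = math.radians(KAABA_LON - lon_deg)
    # Standard initial-bearing formula for a great circle.
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    # atan2 returns (-180, 180]; shift into [0, 360).
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


if __name__ == "__main__":
    # Atlanta, GA (about 33.749 N, 84.388 W), home of Georgia Tech.
    print(f"{qibla_bearing_deg(33.749, -84.388):.1f} degrees from true north")
```

The result is relative to true north; a physical magnetic compass would additionally need a local magnetic-declination correction, which this sketch leaves out.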

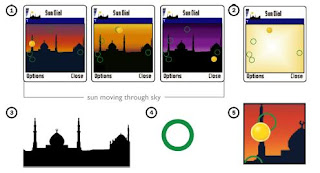

The application shows users where the sun is so they can tell when to begin their preparatory rituals and pray. To mimic nature, the sky changes color as the sun moves across it, and silhouettes of mosques in the background give the display a religious feel. This imagery suits its purpose far better than a simple alarm beep announcing that it is time to pray: it helps users reflect on their faith and feels more natural than an alarm.
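How the sun's height above the horizon could drive a changing sky like this can be sketched with the standard solar-elevation formula. This is a rough illustration, not the paper's implementation: it assumes Cooper's approximation for solar declination, takes the time as local solar time (ignoring time zones, longitude offset, and the equation of time), and the color bands in the hypothetical `sky_phase` function are illustrative choices, apart from -6°, which is the conventional end of civil twilight.

```python
import math


def solar_elevation_deg(lat_deg: float, day_of_year: int, solar_hour: float) -> float:
    """Approximate sun elevation above the horizon, in degrees.

    solar_hour is local *solar* time (12.0 = solar noon); converting clock
    time to solar time is omitted in this sketch.
    """
    # Cooper's approximation for solar declination (degrees).
    decl = 23.45 * math.sin(math.radians(360.0 * (284 + day_of_year) / 365.0))
    # Hour angle: the sun moves 15 degrees per hour away from solar noon.
    hour_angle = 15.0 * (solar_hour - 12.0)
    lat, d, h = (math.radians(v) for v in (lat_deg, decl, hour_angle))
    sin_elev = math.sin(lat) * math.sin(d) + math.cos(lat) * math.cos(d) * math.cos(h)
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_elev))))


def sky_phase(elevation_deg: float) -> str:
    """Hypothetical mapping from sun elevation to a background sky style."""
    if elevation_deg < -6.0:   # below civil twilight
        return "night"
    if elevation_deg < 0.0:    # sun just below the horizon
        return "twilight"
    if elevation_deg < 10.0:   # low sun: dawn or dusk colors
        return "low sun"
    return "day"


if __name__ == "__main__":
    # Illustrative pass over one day at latitude 33.75 (roughly Atlanta), day 172.
    for hour in range(0, 24, 3):
        elev = solar_elevation_deg(33.75, 172, hour)
        print(f"{hour:02d}:00 solar time  elevation {elev:6.1f}  -> {sky_phase(elev)}")
```

A display built this way would redraw the sun and pick a sky style from the current elevation, rather than waiting for a single alarm event.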

Many other religions could benefit from similar designs; for example, Christians could display a crucifix on their screen as a reminder to be thankful for the sacrifice Christ made. Hopefully this will be explored in future work.